And yeah, the results shocked even me.

I’ve written Java for 15 years. From EJBs to Spring Boot, from XML to annotations, I’ve seen it all.

And like many long-time Java developers, I’ve built up muscle memory — and mental baggage. I knew what worked. I knew what hurt. And over time… I stopped questioning.

But one night, I had this thought:

What if I threw away everything I knew — and rewrote Java like it was designed for 2025, not 2005?

Not just switching syntax. I mean going full force:

- Modern concurrency with Loom

- Instant startup with CRaC

- Embedded AI copilots

- Native builds that rival Go

- Testcontainers, Observability, Sealed types… the whole shebang

So I did it. One week. No legacy habits. Just the cleanest, sharpest tools 2025 Java has to offer.

And the results?

Some parts felt like cheating. Some felt like freedom. And some — frankly — shocked me.

Here’s exactly what I changed — and what I learned.

1. Switched to Virtual Threads — Everything Changed

I’ve written Java long enough to know one thing:

Platform threads, each backed by an OS thread, have always been the bottleneck.

You open 5,000 concurrent connections?

You either overload the OS, or you build a complex async/reactive mess.

For years, I managed thread pools like this:

ExecutorService executor = Executors.newFixedThreadPool(100);

Set it too small? Requests queue up.

Too big? RAM spikes. Context switches explode. You get the idea.

Then I tried this:

var executor = Executors.newVirtualThreadPerTaskExecutor();

Same scraper code, new executor. 5,000 URLs. One run.

No drama. No thread starvation. No tuning.

It just worked, and used less memory than my earlier test with 2,000 platform threads.

Virtual threads are JVM-managed, not OS-managed.

When they block on I/O, they park and free the underlying OS thread for other work.

Your code stays synchronous and clean — but handles thousands of tasks.
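To make the 5,000-task run concrete, here is a minimal sketch. It is not the author's scraper, which the article doesn't show: Thread.sleep stands in for each blocking HTTP call, and VirtualThreadDemo and runAll are hypothetical names. It assumes Java 21.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo: Thread.sleep stands in for the scraper's blocking HTTP call.
final class VirtualThreadDemo {

    // Submits `tasks` blocking jobs, one virtual thread per job, and returns
    // how many of them actually ran on a virtual thread.
    static int runAll(int tasks, Duration simulatedIo) {
        List<Future<Boolean>> results = new ArrayList<>(tasks);
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                results.add(executor.submit(() -> {
                    Thread.sleep(simulatedIo); // parks the virtual thread; its carrier OS thread is freed
                    return Thread.currentThread().isVirtual();
                }));
            }
        } // close() waits until every submitted task has finished
        int onVirtualThreads = 0;
        for (Future<Boolean> f : results) {
            if (f.resultNow()) { // safe: all tasks completed before close() returned
                onVirtualThreads++;
            }
        }
        return onVirtualThreads;
    }

    public static void main(String[] args) {
        System.out.println(runAll(5_000, Duration.ofMillis(100)));
    }
}
```

With newFixedThreadPool(100) instead, the same 5,000 blocking tasks would run at most 100 at a time, so the sleeps would queue up in about 50 batches.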

Java 21 made it real. Project Loom finally delivered.

No more callbacks. No more CompletableFuture pyramids. No more tuning thread pools.

After years of managing threads manually, Virtual Threads felt like cheating.

I wrote less code, got more performance — and never looked back.

2. Tried CRaC — Cold Starts Became Instant

Let’s face it — Java apps are fast after they warm up.

But that warm-up? Painful. Especially in cloud environments where cold starts = user drop-off.

I’ve seen REST APIs that take 10+ seconds to boot.

Spring logs flying past like a rocket launch — just to say “Hello, world.”

Then I tried CRaC — Coordinated Restore at Checkpoint.

It’s a JVM-level snapshot system: you start your app once, take a snapshot, and the next time it boots… it resumes from that exact state. Instantly.

java -XX:CRaCCheckpointTo=./checkpoint -jar my-app.jar

# Later...

java -XX:CRaCRestoreFrom=./checkpoint

I tested it with Spring Boot 3.2 + Azul Zulu JDK.

First launch: ~6.5s

Restored launch: <100ms

Yes, under 100ms — without rewriting a single line of business logic.

Setup Was Surprisingly Simple:

- Add the org.crac dependency

- Use a supported JDK (Azul Zulu 17 or 21 with CRaC)

- Enable CRIU (Linux only, for now)

- Configure Spring Boot with -Dspring.context.checkpoint=onRefresh

It starts at near-native speed but still runs on the JVM. You keep introspection, GC, debugging, everything.

CRaC is like save points for your Java app.

No warm-up. No log marathon. Just instant readiness, every time.

3. Built with Micronaut + GraalVM — Cold Start in 50ms, No Joke

While CRaC gives traditional JVM apps a second life with faster cold starts, there’s another path that skips the JVM warm-up entirely: native compilation with Micronaut + GraalVM.

Spring Boot apps are solid, until you run them in the cloud.

Startup takes 5–10 seconds. Memory hits 200MB+.

Not ideal when you’re deploying serverless functions or spinning up short-lived containers.

So I tried Micronaut, a modern framework built for ahead-of-time (AOT) compilation, paired it with GraalVM Native Image, and compiled my app into a native binary.

./gradlew nativeCompile

The result?

- Cold start: 50ms (measured with a stopwatch)

- Memory: ~40MB, down from 200MB+

- No JVM warm-up. No classpath scanning. Just instant execution.

I didn’t rewrite business logic.

Micronaut’s DI, HTTP clients, and database integrations worked right away.

Tooling was great too: the VS Code extension handled project creation, native builds, Docker, even Kubernetes deploys.

GraalVM + Micronaut made my Java app feel like a Go binary — without leaving the JVM mindset.

4. Embraced Records + Sealed Classes — And My Domain Models Finally Made Sense

Java has always made it possible to write good domain models — but never easy.

We stitched together abstract classes, verbose DTOs, and instanceof blocks just to describe something like:

“A shape is either a circle or a rectangle.”

Now, with Records (Java’s product types) and Sealed Classes (sum types), this kind of modeling is built in.

sealed interface Shape {

record Circle(Point center, int radius) implements Shape {}

record Rectangle(Point lowerLeft, Point upperRight) implements Shape {}

}

That’s it.

No boilerplate.

No getters/setters.

And now with pattern matching in switch, we get exhaustiveness checks for free:

return switch (shape) {

case Circle c -> compute(c);

case Rectangle r -> compute(r);

};

No default needed — the compiler knows we’ve handled every case.
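Here is a self-contained version of the Shape example, as a sketch. The article never defines Point or compute, so the Point record and the area method below are assumptions; the rectangle case uses a Java 21 record pattern.

```java
// Shapes is declared first so the file also works with the single-file source launcher.
final class Shapes {

    // Exhaustive switch over the sealed Shape hierarchy: no default branch.
    static double area(Shape shape) {
        return switch (shape) {
            case Shape.Circle c -> Math.PI * c.radius() * c.radius();
            // Record pattern (Java 21) pulls both corners straight out of the record.
            case Shape.Rectangle(Point ll, Point ur) ->
                    (double) (ur.x() - ll.x()) * (ur.y() - ll.y());
        };
    }

    public static void main(String[] args) {
        System.out.println(area(new Shape.Rectangle(new Point(0, 0), new Point(3, 4))));
        System.out.println(area(new Shape.Circle(new Point(0, 0), 2)));
    }
}

// Assumed: the article uses Point without defining it.
record Point(int x, int y) {}

sealed interface Shape {
    record Circle(Point center, int radius) implements Shape {}
    record Rectangle(Point lowerLeft, Point upperRight) implements Shape {}
}
```

If you later add, say, a Triangle record to Shape, the switch in area stops compiling until you handle the new case, which is exactly the exhaustiveness check described above.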

These are real algebraic data types in Java. And they feel natural.

I even rewrote an old API built on Future.get() using sealed classes:

Instead of throwing 4 types of exceptions, I returned a sealed result — Success, Failure, Timeout, or Cancelled.

Cleaner. Safer. Easier to reason about.
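A minimal sketch of that idea, assuming Java 21. Result, Results.await and Results.describe are hypothetical names, and mapping InterruptedException to Cancelled (after restoring the interrupt flag) is an assumption made for this sketch; the article only names the four outcomes.

```java
import java.util.concurrent.CancellationException;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class Results {

    // Turns the exceptions of Future.get(timeout, unit) into values.
    static <T> Result<T> await(Future<T> future, long timeoutMillis) {
        try {
            return new Result.Success<>(future.get(timeoutMillis, TimeUnit.MILLISECONDS));
        } catch (ExecutionException e) {
            return new Result.Failure<>(e.getCause()); // the task itself threw
        } catch (TimeoutException e) {
            return new Result.Timeout<>(timeoutMillis);
        } catch (CancellationException e) {
            return new Result.Cancelled<>();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to callers
            return new Result.Cancelled<>();    // assumption: treat interruption as cancellation
        }
    }

    // Exhaustive over the sealed hierarchy, so no default branch is needed.
    static String describe(Result<?> result) {
        return switch (result) {
            case Result.Success<?> s -> "ok: " + s.value();
            case Result.Failure<?> f -> "failed: " + f.cause().getMessage();
            case Result.Timeout<?> t -> "timed out after " + t.millis() + " ms";
            case Result.Cancelled<?> c -> "cancelled";
        };
    }

    public static void main(String[] args) {
        System.out.println(describe(await(CompletableFuture.completedFuture("done"), 100)));
        System.out.println(describe(await(new CompletableFuture<String>(), 50)));
    }
}

// Hypothetical sealed result type: one record per outcome named in the text.
sealed interface Result<T> {
    record Success<T>(T value) implements Result<T> {}
    record Failure<T>(Throwable cause) implements Result<T> {}
    record Timeout<T>(long millis) implements Result<T> {}
    record Cancelled<T>() implements Result<T> {}
}
```

Callers now switch over four values instead of writing four catch blocks, and the compiler flags any outcome they forget.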

Records and sealed classes made my models simpler and smarter.

Java finally speaks the language of logic, not just syntax.

5. Upgraded My JUnit Tests with Testcontainers + AssertJ — And Finally Trusted My Tests

I used to fake everything in tests.

Mocked the database. Stubbed out Redis. Wrote assertEquals() and hoped it wouldn’t break in prod.

But let’s be honest — unit tests don’t tell the whole story.

In 2025, I wanted tests that behave like reality. So I switched to Testcontainers.

// The test class needs @Testcontainers so JUnit 5 manages this container
@Container static PostgreSQLContainer<?> postgres = new PostgreSQLContainer<>("postgres:14");

That’s a real Postgres instance.

Spun up in Docker. Wired into my tests. Reset between runs.

Then I swapped out brittle JUnit assertions with AssertJ — fluent, readable, expressive:

assertThat(user.getEmail()).contains("@");

Suddenly, my tests felt like documentation.

They didn’t just pass — they proved things worked across layers.

With Testcontainers and AssertJ, I stopped mocking reality — and started testing it.

6. Let Copilot Write the Boilerplate — So I Could Focus on the Logic

I used to spend hours writing boilerplate.

Getters. Setters. DTOs. Unit tests. Mapping layers.

Most of it felt like typing for the sake of typing.

Then I let Copilot step in.

Now I type record User(...), and the class is done before I finish the parentheses.

I start a test class, and Copilot scaffolds the whole structure — mocks, method stubs, even names.

It’s not always perfect. I still review. I still edit.

But what used to take an hour now takes 10 minutes — and my brain stays fresh for the hard stuff.

I don’t hand-code boilerplate anymore.

I delegate it — and spend my time where it matters.

And honestly? It feels like I hired a junior dev — who never gets tired.

7. Adopted the Java Module System — Classpath Chaos Finally Ended

I’ve shipped more than one Java monolith that exploded into JAR-hell:

- 300 MB fat JARs

- Shadowed classes and mysterious NoClassDefFoundErrors

- Half a day tweaking -classpath just to boot the app

So I bit the bullet and added module-info.java.

module com.acme.orders {

requires com.acme.inventory;

exports com.acme.orders.api;

}

Now every package is either exported or truly hidden.

The compiler checks dependencies; the runtime refuses split packages.
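The JDK itself is split into modules, so the same exported-versus-hidden rule can be observed at runtime without building a multi-module project. This sketch uses java.lang.Module to ask java.base which packages it exports to everyone; ModuleCheck and exportedByJavaBase are hypothetical names for this illustration.

```java
// Uses only java.lang.Module, so it runs as a plain classpath program;
// no module-info.java is needed to inspect the JDK's own modules.
final class ModuleCheck {

    // True only if java.base exports the package to every module (unconditionally).
    static boolean exportedByJavaBase(String packageName) {
        Module javaBase = Object.class.getModule();
        return javaBase.isExported(packageName);
    }

    public static void main(String[] args) {
        System.out.println(Object.class.getModule().getName());      // module that owns java.lang
        System.out.println(exportedByJavaBase("java.lang"));         // public API package
        System.out.println(exportedByJavaBase("jdk.internal.misc")); // internal package
    }
}
```

jdk.internal.misc lives in java.base but is exported only to a few named JDK modules, so isExported reports it as hidden from everyone else, just like a package your own module-info.java leaves out of its exports.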

My build shrank from a 300 MB fat JAR to six focused modules of about 40 MB each, and startup scans dropped by 30%.

Refactoring a shared util class?

The IDE tells me exactly which modules break before I hit mvn test.

CI failures for missing transitive libs? Gone.

Java modules are like vegetables: a pain to start, but everything runs healthier once you swallow them.

8. Switched from Micrometer to OpenTelemetry — and Finally Understood What Observability Means

For most of my Java career, I thought metrics meant Micrometer.

You wire it up, add some counters, pipe them to Prometheus, and voilà — you’re “monitoring”.

But here’s the truth:

Micrometer gives you numbers. OpenTelemetry gives you answers.

I migrated one Spring Boot service from Micrometer to OpenTelemetry. Same app, same metrics.

But now I also had traces, context, logs — all stitched together.

When an API started slowing down, I didn’t just see “latency up 45%”.

I saw:

- Which downstream service was bottlenecked

- How long Redis took in the request chain

- What exact span the slowdown occurred in

All in the same dashboard.

Yes, OpenTelemetry has a steeper learning curve.

But it’s like switching from looking through a peephole…

to walking into the entire building.

If all you need are JVM-level counters, Micrometer is fine.

If you’re debugging real issues in real systems, OpenTelemetry changes the game.

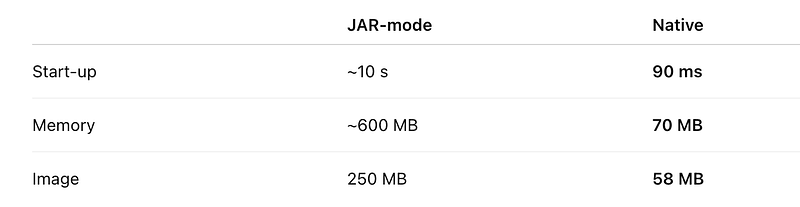

9. Turned Spring Boot 3 into a GraalVM Native Image — and Watched a 10 s Boot Shrink to 90 ms

If you’re already deep in the Spring ecosystem?

You don’t have to switch frameworks to go native — Spring Boot 3 now ships with first-class support for GraalVM Native Image.

Spring Boot has always felt like hauling a grand piano onto Kubernetes:

fat JARs (250 MB), 10-second cold starts, 600 MB RSS when the traffic spikes.

Then Spring Boot 3 shipped native-image support out-of-the-box.

# one command: no Dockerfile, no Makefile
mvn spring-boot:build-image -Pnative

Buildpacks and GraalVM chew through the AOT hints and spit out a tiny OCI image containing a single binary.

My demo API went from a 10 s boot to 90 ms.

Yes, reflection can bite, but Spring’s AOT engine does 90% of the heavy lifting.

Missing metadata? Run the app once with the GraalVM tracing agent, and it generates the missing hints for you.

Now that binary slides into Lambda, Cloud Run, even edge nodes — no JVM to warm, no GC pauses at boot.

Spring isn’t “heavy” anymore.

One flag, one binary, and your old monolith sprints like a Go service.

10. Structured Concurrency Made My Async Code… Not Suck

I used to write async Java code like a spider on caffeine — CompletableFutures everywhere, tangled callbacks, leaked threads, and zero guarantees on cleanup.

It “worked” — until it didn’t.

Then I met Structured Concurrency.

Introduced as part of Project Loom, it flips async logic on its head. In Java 21 it is still a preview API, so you compile and run with --enable-preview.

Now my concurrent tasks are:

- Scoped to their parent

- Auto-cancelled on failure

- Easier to reason about than try-finally chains

try (var scope = new StructuredTaskScope.ShutdownOnFailure()) {

var user = scope.fork(() -> fetchUser()); // Subtask<String>, forked on its own virtual thread

var posts = scope.fork(() -> fetchPosts());

scope.join(); // Wait for both (or for the first failure)

scope.throwIfFailed(); // Rethrow the first failure as an ExecutionException

return user.get() + posts.get(); // Safe: both subtasks succeeded

}

No more dangling threads. No more “what if this fails silently?”

For the first time in years, my concurrent code actually reads like synchronous code — and behaves like it, too.

Structured Concurrency didn’t just clean up my code. It cleaned up my thinking.

Final Thoughts

I’ve been a Java dev for over a decade.

I’ve seen the dark days of XML config, servlet hell, and public static void main(String[] args).

But 2025 is different.

Java’s not just alive — it’s thriving. It’s leaner, faster, smarter. It’s fun again.

If you gave up on Java, now’s the time to come back.

And if you’re still here?

Welcome to the best version of Java we’ve ever had.

Let’s build.